Colorizing Black and White Images Using Convolutional Neural Networks (Without GANs)

Contents:

- Overview

- The Data and Processing

- Loss Function.

- Loading Data

- Utility Callback

- Model Architecture

- Results

- Test Output

- Code Link

- Future Work

- Reference

1 — Overview: Image colorization is the process of assigning colors to a black and white (grayscale) image to make it more aesthetically appealing and perceptually meaningful. It is recognized as a sophisticated task that often requires prior knowledge of the image content and manual adjustment to achieve artifact-free results. Also, since objects can have different colors, there are many plausible ways to assign colors to the pixels of an image, which means the problem has no unique solution.

Nowadays, image colorization is usually done by hand in Photoshop. Many institutions use image colorization services to add color to historic grayscale images, and colorization is also used for documentary images. However, doing this in Photoshop takes considerable effort, time, and skill. One solution to this problem is to automate it with machine learning or deep learning techniques.

Image colorization with machine learning is an interesting task. It can of course be done with GANs, but to make this piece of work more interesting and to test the boundaries of deep learning, we will not use GANs.

GANs also typically require a lot of GPU memory, so some of us might not be able to replicate the work. We will therefore stick to a non-GAN approach.

2 — The Data and Processing: We will use a flower dataset containing 25,999 images.

Data Link: https://www.kaggle.com/datasets/ismailsiddiqui011/colorizer-data

For preprocessing, we resize each image to 256x256 and min-max scale its pixel values to the range 0–1. We then split the color image into its three channels R, G, and B, which become our targets (Y); the input (X) is the grayscale version of the image. You might be wondering why we split the channels rather than predict all three as a single output. The reason is that a proper color depends on the balance between the RGB channels: for example, roughly 50% red, 50% green, and 0% blue gives an olive (dark yellow) tone, so each channel gets its own output.

To rebuild the image from the R, G, and B channels, we simply concatenate them along the channel axis.
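The preprocessing and rebuilding steps can be sketched in NumPy as follows. This is a minimal sketch, not the author's code: it assumes 8-bit images that are already 256x256 (resizing is left to the image loader), treats min-max scaling as mapping the fixed 0–255 range to 0–1, and uses the ITU-R BT.601 luma weights for the grayscale input, which is an assumption since the article does not say how the grayscale image is produced. The function names are hypothetical.

```python
import numpy as np

# Assumed grayscale conversion: ITU-R BT.601 luma weights (sum to 1.0).
LUMA = np.array([0.299, 0.587, 0.114], dtype=np.float32)

def min_max_scale(img, lo=0.0, hi=255.0):
    """Map pixel values from the fixed range [lo, hi] to [0, 1]."""
    return (img.astype(np.float32) - lo) / (hi - lo)

def make_example(rgb_uint8):
    """Return X of shape (H, W, 1) and Y as three (H, W, 1) channel maps."""
    rgb = min_max_scale(rgb_uint8)
    x = (rgb @ LUMA)[..., np.newaxis]      # weighted sum over the RGB axis
    r, g, b = np.split(rgb, 3, axis=-1)    # one target per color channel
    return x, (r, g, b)

def rebuild(r, g, b):
    """Concatenate the three channel maps back into an (H, W, 3) image."""
    return np.concatenate([r, g, b], axis=-1)

rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(256, 256, 3)).astype(np.uint8)
x, (r, g, b) = make_example(img)
print(x.shape, r.shape)  # (256, 256, 1) (256, 256, 1)
```

Rebuilding from the ground-truth channels returns the scaled input image exactly; at inference time the same `rebuild` call would be applied to the three predicted channels.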

3 — Loss Function: Since we need a proper balance between the RGB channels, we apply a loss function to each of the three outputs.

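- Mean Absolute Error (MAE): The mean absolute error of a model with respect to a test set is the mean of the absolute values of the individual prediction errors over all instances in the test set. Mathematically:

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n} \left| y_i - \hat{y}_i \right|$$

where $n$ is the number of instances, $y_i$ is the true value, and $\hat{y}_i$ is the predicted value for instance $i$.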
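The MAE definition, applied once per output channel, can be written in NumPy as below. This is an illustrative sketch: it assumes the per-channel losses are simply summed with equal weights, which the article does not state explicitly.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean absolute error: the mean of |y_i - y_hat_i| over all elements."""
    y_true = np.asarray(y_true, dtype=np.float64)
    y_pred = np.asarray(y_pred, dtype=np.float64)
    return float(np.mean(np.abs(y_true - y_pred)))

def total_loss(true_channels, pred_channels):
    """Sum of per-channel MAE for the R, G and B outputs (equal weights assumed)."""
    return sum(mae(t, p) for t, p in zip(true_channels, pred_channels))

print(mae([1.0, 2.0, 3.0], [1.0, 3.0, 5.0]))  # 1.0
```

In Keras, the equivalent is to give a multi-output model one `"mae"` loss per output when compiling; Keras then minimizes the (optionally weighted) sum of the per-output losses.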

4 — Loading Data: To work with the images, we first need to load them, which we do with a custom image data generator:

- Data Generator: To make our work easier, we can use predefined functions from TensorFlow/Keras and simply pass in a dataframe containing the image paths. The generator takes a random sample, loads the images, randomly applies augmentation to the input image, and splits the target into its R, G, and B channels.
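A plain-Python version of such a generator might look like the sketch below. It is not the author's implementation: `load_fn` is a hypothetical callable that turns a path into a 256x256x3 float array already scaled to 0–1, the paths would come from the dataframe (for example a list of its path column), and the augmentation is an assumed random brightness shift. Only a photometric change is applied to the input, because a geometric one (such as a flip) would misalign the input with its color targets.

```python
import numpy as np

LUMA = np.array([0.299, 0.587, 0.114], dtype=np.float32)  # assumed gray weights

def colorizer_generator(paths, load_fn, batch_size=16, seed=None):
    """Yield (X, [R, G, B]) batches forever from a list of image paths.

    load_fn (hypothetical): path -> float32 RGB array in [0, 1], shape (H, W, 3).
    """
    rng = np.random.default_rng(seed)
    while True:
        # Random sample of images for this batch (no repeats within a batch).
        idx = rng.choice(len(paths), size=batch_size, replace=False)
        rgb = np.stack([load_fn(paths[i]) for i in idx]).astype(np.float32)
        x = (rgb @ LUMA)[..., np.newaxis]
        # Augment roughly half of the inputs with a brightness shift in [-0.1, 0.1].
        apply = rng.random(batch_size) < 0.5
        shift = rng.uniform(-0.1, 0.1, size=batch_size).astype(np.float32)
        x = np.clip(x + (apply * shift)[:, None, None, None], 0.0, 1.0)
        # Targets: one single-channel array per model output.
        r, g, b = np.split(rgb, 3, axis=-1)
        yield x, [r, g, b]
```

Because it yields `(inputs, targets)` pairs with one target per output, a generator like this can be passed to Keras `model.fit` for a three-output model, together with `steps_per_epoch`.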

5 — Utility Callback: To visualize the training process, we use a custom callback that displays the input, the ground truth, and the predicted image.
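A custom Keras callback of this kind would typically override `on_epoch_end`, run the model on a fixed sample, and show the result with something like matplotlib's `imshow`. The sketch below covers only the framework-independent step of assembling the input, ground truth, and prediction into one side-by-side image; the function name and the white separator strip are illustrative choices, not taken from the article.

```python
import numpy as np

def comparison_panel(gray, truth_rgb, pred_rgb, gap=4):
    """Place input | ground truth | prediction side by side in one RGB image.

    gray: (H, W, 1) input; truth_rgb and pred_rgb: (H, W, 3), values in [0, 1].
    """
    h = gray.shape[0]
    gray_rgb = np.repeat(gray, 3, axis=-1)           # show grayscale as RGB
    spacer = np.ones((h, gap, 3), dtype=np.float32)  # white separator strip
    pred = np.clip(pred_rgb, 0.0, 1.0)               # keep values displayable
    panel = np.concatenate([gray_rgb, spacer, truth_rgb, spacer, pred], axis=1)
    return panel.astype(np.float32)
```

Inside the callback, the prediction would first be rebuilt by concatenating the model's R, G, and B outputs along the channel axis before being passed to this helper.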

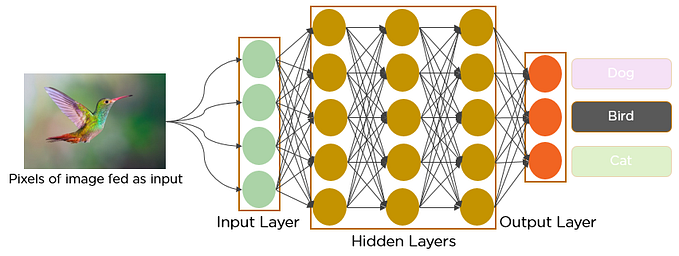

6 — Model Architecture: We define a custom model architecture inspired by Residual Networks (ResNet) and Dense Networks (DenseNet). The idea is to take a pretrained network such as VGG19 and extract the embeddings (feature maps) at several key blocks of this pretrained model. We then add convolution layers that are connected to the earlier blocks through residual connections, and keep forwarding the result to the next layer.
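The core operations of such a design can be illustrated with a toy NumPy forward pass. This is not the author's network: in Keras the encoder features would come from something like `tf.keras.applications.VGG19(include_top=False)` and intermediate layers such as `block3_conv4` (VGG19 expects three input channels, so the grayscale input would have to be repeated or projected to three channels). Here random arrays stand in for those feature maps, a 1x1 convolution is written as a per-pixel matrix multiply, and nearest-neighbour upsampling plus addition stands in for the residual merge; all names and shapes are hypothetical.

```python
import numpy as np

def upsample2x(feat):
    """Nearest-neighbour upsampling of an (H, W, C) feature map by a factor of 2."""
    return np.repeat(np.repeat(feat, 2, axis=0), 2, axis=1)

def conv1x1(feat, weight):
    """1x1 convolution as a per-pixel matmul: (H, W, C_in) @ (C_in, C_out)."""
    return feat @ weight

def residual_merge(decoder_feat, encoder_feat, weight):
    """Upsample the coarser decoder map, project the encoder (skip) features to
    the same channel count, add them, and apply a ReLU."""
    return np.maximum(upsample2x(decoder_feat) + conv1x1(encoder_feat, weight), 0.0)

def rgb_heads(feat, head_weights):
    """Three separate 1x1 heads with a sigmoid: one (H, W, 1) map per color channel."""
    return [1.0 / (1.0 + np.exp(-conv1x1(feat, w))) for w in head_weights]

rng = np.random.default_rng(0)
deep = rng.standard_normal((4, 4, 8))       # stand-in for a deep, coarse block
shallow = rng.standard_normal((8, 8, 16))   # stand-in for an earlier, finer block
merged = residual_merge(deep, shallow, rng.standard_normal((16, 8)))
r, g, b = rgb_heads(merged, [rng.standard_normal((8, 1)) for _ in range(3)])
print(merged.shape, r.shape)  # (8, 8, 8) (8, 8, 1)
```

The sigmoid on each head keeps the predicted channel values in 0–1, matching the min-max scaled targets.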

7 — Results: After training the model for 135 epochs, we got pretty good results:

8 — Test Output: Here are some sample outputs on the test data:

9 — Code Link: Link

10 — Future Work:

- Experiment with more complex encoders such as a Vision Transformer.

- Use more data, drawn from mixed domains.

- Build a more advanced model to colorize old black and white videos and movies.

11 — Reference:

- arXiv — https://arxiv.org/

- Papers With Code — https://paperswithcode.com/